spark-testing-base is one of the main testing libraries for Spark, so if you use Spark, chances are high that you have already come across it.

Probably for historical reasons, most of its core API is based on the SparkContext. The SparkSession was introduced in Spark 2.0 to simplify a rather messy set of entry points: (i) the SparkContext, (ii) the SQLContext wrapping it, and (iii) the HiveContext wrapping both.

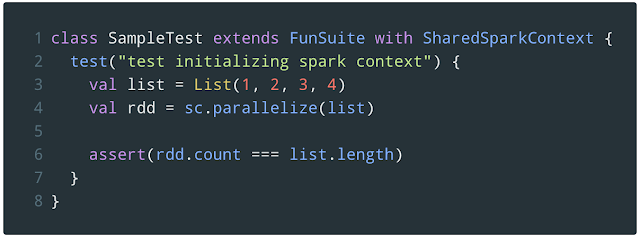

The simplest access to Spark from a test is the SharedSparkContext:
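A minimal sketch of such a test, assuming ScalaTest 3.0.x (where `FunSuite` lives in `org.scalatest`) and spark-testing-base on the test classpath. The suite name `RddCountSuite` is hypothetical; `sc` is the SparkContext supplied by the trait.

```scala
import com.holdenkarau.spark.testing.SharedSparkContext
import org.scalatest.FunSuite

// Hypothetical suite name. SharedSparkContext supplies `sc`
// and takes care of creating and stopping the context.
class RddCountSuite extends FunSuite with SharedSparkContext {
  test("parallelize keeps every element") {
    val input = List(1, 2, 3, 4)
    val rdd = sc.parallelize(input)
    assert(rdd.count() === input.length.toLong)
  }
}
```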

However, in most cases you will be interacting with a DataFrame rather than an RDD.

Accordingly, a DataFrameTestSuite is introduced to provide a SparkSession:
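As a sketch, and assuming the trait meant here is the library's `DataFrameSuiteBase`, a DataFrame test could look as follows. The suite name is hypothetical, and ScalaTest 3.0.x is assumed again. Note how the `sqlContext` is first copied into a local val before its implicits are imported.

```scala
import com.holdenkarau.spark.testing.DataFrameSuiteBase
import org.scalatest.FunSuite

// Hypothetical suite name; assumes DataFrameSuiteBase is the intended trait.
class DataFrameEqualitySuite extends FunSuite with DataFrameSuiteBase {
  test("a DataFrame equals itself") {
    // Copy the context into a local val: an import needs a stable identifier.
    val sqlCtx = sqlContext
    import sqlCtx.implicits._

    val df = sc.parallelize(List(1, 2, 3)).toDF("value")
    assertDataFrameEquals(df, df)
  }
}
```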

If you look at the actual implementation of the SharedSparkContext, there are various things you may notice:

Source: https://github.com/holdenk/spark-testing-base/blob/master/core/src/main/2.0/scala/com/holdenkarau/spark/testing/SharedSparkContext.scala

So the trait extends the BeforeAndAfterAll trait from ScalaTest, in order to destroy any existing context and initialize a new one before any test starts, as well as to destroy it after all tests have completed. Specifically, the SparkContext is held in a private transient var named _sc and exposed to implementing test classes through a def.

If you remember from the official guide, the main difference between val and var is that a val is a constant reference, whereas a var is a reference variable and can therefore be reassigned (i.e. overwritten). A def, in turn, defines a method: it cannot be reassigned, but its body is evaluated on every call. Hence, the sc def dynamically binds to the current value of the private member _sc.

If we implemented the SparkSession in the same way (with a private member and a def retrieving it), then, for instance, sqlContext.implicits._ would not be importable, because Scala only allows imports from a stable identifier such as a val or an object, not from a def or a var.
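The restriction can be reproduced without Spark. The sketch below uses hypothetical stand-ins (`FakeContext`, `Holder`, `StableIdentifierDemo`) for the context, the trait holding it, and a test using it. Only the shape (a private var exposed through a def) mirrors what is described above.

```scala
// Hypothetical stand-in for SQLContext and its implicits; no Spark required.
class FakeContext(val name: String) {
  object implicits {
    implicit class RichInt(val n: Int) {
      def tagged: String = s"$name:$n"
    }
  }
}

// Same shape as the trait: a private var exposed through a def.
class Holder {
  private var _ctx: FakeContext = null
  def ctx: FakeContext = _ctx // re-reads _ctx on every call
  def reset(name: String): Unit = { _ctx = new FakeContext(name) }
}

object StableIdentifierDemo {
  def tagWith(holder: Holder, n: Int): String = {
    // import holder.ctx.implicits._ // rejected: stable identifier required
    val stable = holder.ctx          // a val is a stable identifier
    import stable.implicits._
    n.tagged
  }
}
```

Calling `reset` between two calls to `tagWith` changes the result, which illustrates the dynamic binding of the def to the current value of the var.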

One way to circumvent this problem is to assign the sqlContext to a val before importing its implicits, similarly to the example with the DataFrameSuite.

Alternatively, the session itself can be declared as an immutable val whose initialization is deferred until the first time it is used, that is, as a lazy val.
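The deferred initialization can be observed in plain Scala. In this sketch, `LazyValDemo` and its string-valued `session` are hypothetical placeholders for an expensive resource such as a SparkSession.

```scala
object LazyValDemo {
  var initCount = 0 // counts how often the initializer body runs

  // Hypothetical placeholder for an expensive resource.
  lazy val session: String = {
    initCount += 1
    "session"
  }
}
```

Before the first access to `LazyValDemo.session` the counter stays at 0. After any number of accesses it is 1, since the initializer runs only once.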

Consequently, a SharedSparkSession extension to the existing SharedSparkContext could be implemented this way:
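What follows is a minimal sketch of the idea rather than a definitive implementation. It assumes Spark 2.x, ScalaTest 3.0.x, and that building the session from the configuration of the already running `sc` is acceptable. The member name `spark` and the suite `SharedSparkSessionSuite` are illustrative choices.

```scala
import com.holdenkarau.spark.testing.SharedSparkContext
import org.apache.spark.sql.SparkSession
import org.scalatest.{FunSuite, Suite}

// Sketch: the session is a lazy val, so it is a stable identifier and is
// only built on first use, after beforeAll() has created the SparkContext.
trait SharedSparkSession extends SharedSparkContext { self: Suite =>
  lazy val spark: SparkSession =
    SparkSession.builder().config(sc.getConf).getOrCreate()
}

// Hypothetical suite showing that the implicits now import directly.
class SharedSparkSessionSuite extends FunSuite with SharedSparkSession {
  test("implicits import directly from the lazy val") {
    import spark.implicits._
    val df = Seq(1, 2, 3).toDF("value")
    assert(df.count() === 3L)
  }
}
```

Because `spark` must not be touched before `beforeAll()` has run, it should only be used inside test bodies, not in the constructor of the suite.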

Andrea
